Counting input cells

- Sep. 26, 2021

Introduction

In the past, I wrote Turbo Integrator routines to clear input cells from cubes. This might be because the data is outdated, just 'wrong' or for memory reasons. Refer to this article for all the details. However, we do not clear entire cubes: the function CubeClearData (refer to this article and gosh, that's been 10 years ago !) can do this so not much scripting is needed :-)

The question then arises: which data can we clear, which data should remain. The business/key persons within the TM1 model or organization will most likely tell you. But if they cannot, or you want to dig deeper in just how many different cells we are talking about, then the code in this article will be very useful to you.

The idea is that TM1 models have data spread out by year/month (of course) but also by scenario and/or version. Scenarios typically refer to actual, budget, a (monthly, quarterly) forecast, even budget last year, variances between scenarios, you name it. Versions can be even more abundant: final data, a working version for my scenarios, freeze versions, what if versions, best case/worst case, etc. Needless to say, some cubes can pile up a lot of data as time arises and good usage is made of TM1.

There is a different scenario too (no pun intended). Say that the TM1 model has only a handful of scenarios and versions, but due to user activity the data is really dispersed over millions of combinations. High-level input would be spread over base cells, which can easily run into millions of cells. Data input of let's say an adjustment at a highly aggregated level in the cube can cause lots of issues regarding memory consumption, TI processes coming to a halt, restart and backup times exploding, reading data from the cube takes a long time.

Identify heavy slices

Where are those heavy data slices ? Obviously, you could try to do it manually. This can take time as well as send the memory consumption to high levels. You just do not show all level 0 cells in a cube because the resulting "output" is difficult to manage. Think about having 15 million rows in a cube view: that's not going to work, is it ? The built-in control cubes in TM1, including }StatsByCube, do not have the level of data that we require for this analysis, hence my approach of scripting the work: it can easily be applied to any TM1 model out there.

Turbo Integrator to the rescue

As you are used, here's my full solution. Create a new process, no parameters, only Prolog tab code:

# create a new measures dimension and element

If( DimensionExists( 'Count cells' ) = 0 );

DimensionCreate( 'Count cells' );

EndIf;

If( Dimix( 'Count cells', 'Count cells' ) = 0 );

DimensionElementInsertDirect( 'Count cells', '', 'Count cells', 'N' );

EndIf;

# c loops over the application cubes

c = 1;

While( c <= Dimsiz( '}Cubes' ));

vCube = Dimnm( '}Cubes', c );

If( Subst( vCube, 1, 1 ) @<> '}' );

If( Scan( '_Count cells', vCube ) = 0 );

vCube_New = vCube | '_Count cells';

AsciiOutput( Expand( 'Count cells - start of cube %vCube%.txt' ), vCube_New );

If( CubeExists( vCube_New ) > 0 );

CubeDestroy( vCube_New );

EndIf;

ExecuteProcess( 'Bedrock.Cube.Clone',

'pSourceCube', vCube, 'pTargetCube', vCube_New,

'pIncludeRules', 0, 'pIncludeData', 0,

'pSourceView', '', 'pRuleValues', 0, 'pDebug', 0 );

CellPutS( 'NO', '}CubeProperties', vCube_New, 'LOGGING' );

ExecuteProcess( 'Bedrock.Cube.Data.Copy',

'pCube', vCube, 'pViewSource', '', 'pViewTarget', '', 'pDimension', '',

'pSourceElement', '', 'pTargetElement', '',

'pSkipRules', 1, 'pZeroTarget', 0,

'pZeroSource', 0, 'pDestroyTempObj', 1, 'pDebug', 0 );

EndIf;

EndIf;

c = c + 1;

End;

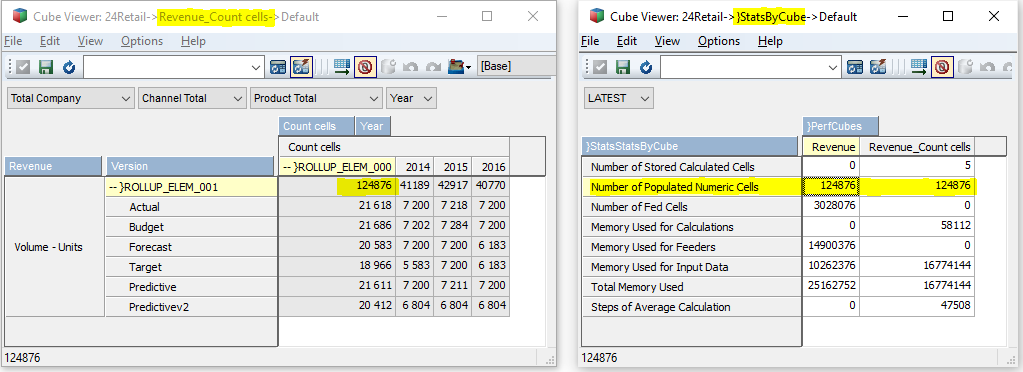

This code loops over all model/application cubes. For each cube, a new cube is created, appropriately suffixed. The new cube shares the exact same level of detail as the "base" cube. Then the input cells are copied over to the new cube, stored on a measure called "Count cells" in a new dimension. When the process has finished you can ask for the total number of cells in every slice of the cube. It could tell you that your current forecast working version has 10 million base level cells that are non-zero !

An analysis like this supposes that you also had a look at the TM1 performance monitor. Cubes that are not big can be excluded from the analysis and save time.

The code calls a number of existing Bedrock processes. IMPORTANT:

- These are Bedrock V3 processes. The Bedrock TI library greatly helps in creating modules and have TI processes act as building blocks to not reinvent the wheel every time. Please download the library or stick to the processes near the top of this page.

- These processes have been edited by myself because there is no Bedrock process to do exactly what we need here. So the easiest way, instead of scripting from scratch if you have too much time on your hands, is to edit the Bedrock processes that come closest.

Attention points

- Do this exercise on a development / testing server ! It should have plenty of memory available, since effectively, the number of base cells (level 0) will double ! No rules are applicable, though, if you take out the rux files (see below).

- Remove the rux files from the TM1 data directory before launching the model.

- The whole process can take quite a long time to run and complete the data transactions. Be prepared for this.

- Take action when the results indicate that some cubes / slices within cubes, contain way too many cells. Ask key users to clean out their data, to the benefit of all users ! (improved waiting times, memory consumption, etc.)

- Bedrock V3 was used here, doing this for Bedrock V4 is left for the reader as an exercise. There is a process for intercube copy data which will be closer to the end result than the copy data process I used. Other interesting features like parallel execution and temporary views and subsets will be an advantage too. I could not use Bedrock V4 in this setup, otherwise I would have done so.

Enjoy the rest of the weekend !